It turns out 23% of posts on r/AmItheAsshole are (potentially) written by AI. But before we freak out and lose our minds, let’s break this down a bit.

Bots on Reddit aren’t new. In fact, automated accounts and sockpuppets have been part of the platform since its earliest days. But what is new, at least to many users, is the unsettling realization that they may no longer be interacting with real people at all. The comment that made you laugh, the argument that pulled you in, the post that made you feel heard.

It might not have been written by a human… Social media is filled with AI slop these days.

Increasingly, Reddit is populated by bots or, at best, by users engaging without genuine intent: using AI to write for them and automate engagement, or putting low effort into writing posts themselves. They’re just farming karma or mimicking real conversation. There’s a great video from Neon Sharpe on YouTube on how to spot these kinds of Reddit posts in the wild:

This video inspired me to go a step further: what if we tried to detect these patterns programmatically? Could we quantify just how bot-saturated Reddit has become, and what kind of behavior do these bots (or AI written posts) actually exhibit?

Quantifying Reddit’s AI Slop Problem

There’s a whole taxonomy of bots operating on Reddit, as detailed in one of the more insightful breakdowns I’ve seen: “How to Identify Bots on Reddit”. It outlines a sprawling ecosystem, from karma-farming repost bots and bait-and-switch accounts to scam bots pushing knockoff merch in comment threads. These bots often betray themselves with small glitches, like turning an apostrophe (') into its HTML entity (for example `&#39;`) or using Reddit’s default gibberish usernames.

Reddit even encourages developers to build on their platform, to build games or useful, ethical bots. The Reddit post about identifying bots makes a useful distinction between “ethical” bots, like AutoModerator, and those designed to blend in, accumulate karma, and eventually be sold for scams, propaganda, or influence operations.

But while most bots focus on reposting or reactive comments, I became interested in a subtler type: the self-post bot. These accounts write original-looking text posts in subreddits like r/AmItheAsshole, mimicking human storytelling, often with surprisingly human results.

But why would anyone do this?

We can’t say for sure, but there are several plausible motivations. Some bots may be farming karma to resell the account. Others might be part of AI testing pipelines, using Reddit for real-time feedback. In some cases, the goal could be to harvest comment data from human users for model fine-tuning. There’s also the possibility of content laundering or posting fake drama to later recycle into TikTok videos or SEO-friendly articles. It could just be trolling or social experimentation. We can’t really say for sure.

Whatever the intent, the effect is the same: a sense that the stories we read and respond to might not be real. Which, as Neon Sharpe pointed out, is a huge bummer and a waste of real human potential.

Building an AI Detector

I’m very interested in the Dead Internet Theory, but we’ll get to that later. Naturally, when I saw there might be a good way to spot AI on Reddit, I thought this might be a fun experiment to try!

What if some of the most upvoted, emotionally resonant posts on Reddit weren’t written by people at all? Inspired by Neon Sharp’s excellent YouTube breakdown, I tried my best at building a simple detection system in Python.

    # Code sample: Calling our function to evaluate a Reddit post url
    def evaluate_url(url):
        jb = fetch_json(url)
        title, body, score = extract_title_body_score(jb)
        raw, cal, breakdown = ai_score(title, body)
        return {
            "url": url,
            "title": title,
            "score": score,  # upvotes
            "raw_score": raw,
            "ai_score": cal,
            "breakdown": breakdown
        }
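The helpers `fetch_json` and `extract_title_body_score` aren’t shown in this post, so here is a minimal sketch of what they might look like. It assumes the public JSON view you get by appending `.json` to a post URL; the User-Agent string is a placeholder, and the real helpers may well differ.

```python
import json
import urllib.request


def fetch_json(url):
    """Fetch the JSON view of a Reddit post (assumed approach: append .json)."""
    json_url = url.split("?")[0].rstrip("/") + ".json"
    req = urllib.request.Request(
        json_url,
        headers={"User-Agent": "aita-slop-check/0.1 (placeholder)"},
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)


def extract_title_body_score(jb):
    """Pull the title, self-text, and upvote score out of a post payload."""
    # A post page returns two listings: [0] holds the post, [1] the comments.
    post = jb[0]["data"]["children"][0]["data"]
    return post.get("title", ""), post.get("selftext", ""), post.get("score", 0)
```

Keeping the fetch and the extraction separate means the extraction step can be exercised on a saved payload without hitting Reddit at all.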

Rather than rely on black-box AI detectors (which are notoriously bad), I built a lightweight, heuristic-based model in plain Python. No AI detection APIs, just some good old-fashioned pattern recognition with regular expressions and NLTK.

The detector assigns each Reddit post an “AI-likelihood” score between 0 and 1, based on seven measurable features pulled directly from Neon Sharp’s observations and my own gut checks.

Our measurable features include:

| Feature | What it captures | Weight |
|---|---|---|
| Quote density (q) | AI’s over-quoting habit | 0.60 |
| Dash usage (d) | Em- and en-dash over-use | 0.30 |
| Odd Unicode (u) | Non-ASCII characters (accents, symbols) | 0.02 |
| Spelling perfection (s) | Few or no typos | 0.02 |
| Paragraph regularity (p) | Uniform sentence-count per paragraph | 0.02 |
| Emotion intensity (e) | Absolute VADER sentiment score | 0.02 |
| Sentence-length uniformity (l) | Low variance in sentence lengths | 0.02 |
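As a rough sketch of how the weights in the table could combine into a raw score: the function name `raw_score` and the dictionary layout are my own illustration, and each feature is assumed to already be scaled to the 0 to 1 range.

```python
# Weights copied from the feature table above.
WEIGHTS = {
    "q": 0.60,  # quote density
    "d": 0.30,  # dash usage
    "u": 0.02,  # odd Unicode
    "s": 0.02,  # spelling perfection
    "p": 0.02,  # paragraph regularity
    "e": 0.02,  # emotion intensity
    "l": 0.02,  # sentence-length uniformity
}


def raw_score(features):
    """Weighted sum of per-feature scores; missing features count as 0."""
    return sum(WEIGHTS[name] * features.get(name, 0.0) for name in WEIGHTS)
```

Because the weights sum to 1.0, the raw score stays between 0 and 1 as long as every feature does, and quotes plus dashes account for 90% of it.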

By default, as you can see in my example below, ChatGPT tends to add em dashes and quotes to everything. An overuse of quotes and em dashes is a pretty good signal that the content was written by AI.
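To make the quote and dash signals concrete, here is one hypothetical way to turn them into 0 to 1 scores with regular expressions. The saturation cutoffs (4 quotes or 2 dashes per 100 words) are made-up values for illustration, not the ones used in the detector.

```python
import re

QUOTE_RE = re.compile('["\u201c\u201d]')  # straight and curly double quotes
DASH_RE = re.compile("[\u2013\u2014]")    # en dash and em dash


def density_score(pattern, text, saturation_per_100_words):
    """Matches per 100 words, scaled so the saturation value maps to 1.0."""
    words = len(text.split())
    if words == 0:
        return 0.0
    per_100 = 100.0 * len(pattern.findall(text)) / words
    return min(1.0, per_100 / saturation_per_100_words)


def quote_density(text):
    return density_score(QUOTE_RE, text, saturation_per_100_words=4.0)  # assumed cutoff


def dash_usage(text):
    return density_score(DASH_RE, text, saturation_per_100_words=2.0)  # assumed cutoff
```

Normalizing by word count keeps a long post from scoring high just because it is long.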

Here’s a breakdown for how calibrated AI scores map to each label we’re using:

| Label | Calibrated Score Range | Description |
|---|---|---|
| Very Likely Human | 0.00 – 0.20 | Almost certainly human-written |
| Probably Human | 0.21 – 0.40 | Likely human, but some doubt |
| Needs Review | 0.41 – 0.60 | Borderline: manual check needed |
| Likely AI | 0.61 – 0.80 | Probably AI-generated |
| Very Likely AI | 0.81 – 1.00 | Almost certainly AI-generated |

To make interpretation easier, I normalized the scores: anything below 0.20 is flagged as “Very Likely Human,” anything above 0.80 as “Very Likely AI,” and anything in between as “Likely AI”, “Probably Human” or “Needs Review.”
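The label bands can be sketched as a small lookup. The name `label_for` is illustrative, and I’m assuming a score that falls in the gap between two printed ranges (say 0.205) goes to the higher band.

```python
def label_for(ai_score):
    """Map a calibrated score in [0, 1] to one of the five label bands."""
    bands = [
        (0.20, "Very Likely Human"),
        (0.40, "Probably Human"),
        (0.60, "Needs Review"),
        (0.80, "Likely AI"),
    ]
    for upper, label in bands:
        if ai_score <= upper:
            return label
    return "Very Likely AI"
```

For example, `label_for(0.589)` returns "Needs Review" and `label_for(0.892)` returns "Very Likely AI".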

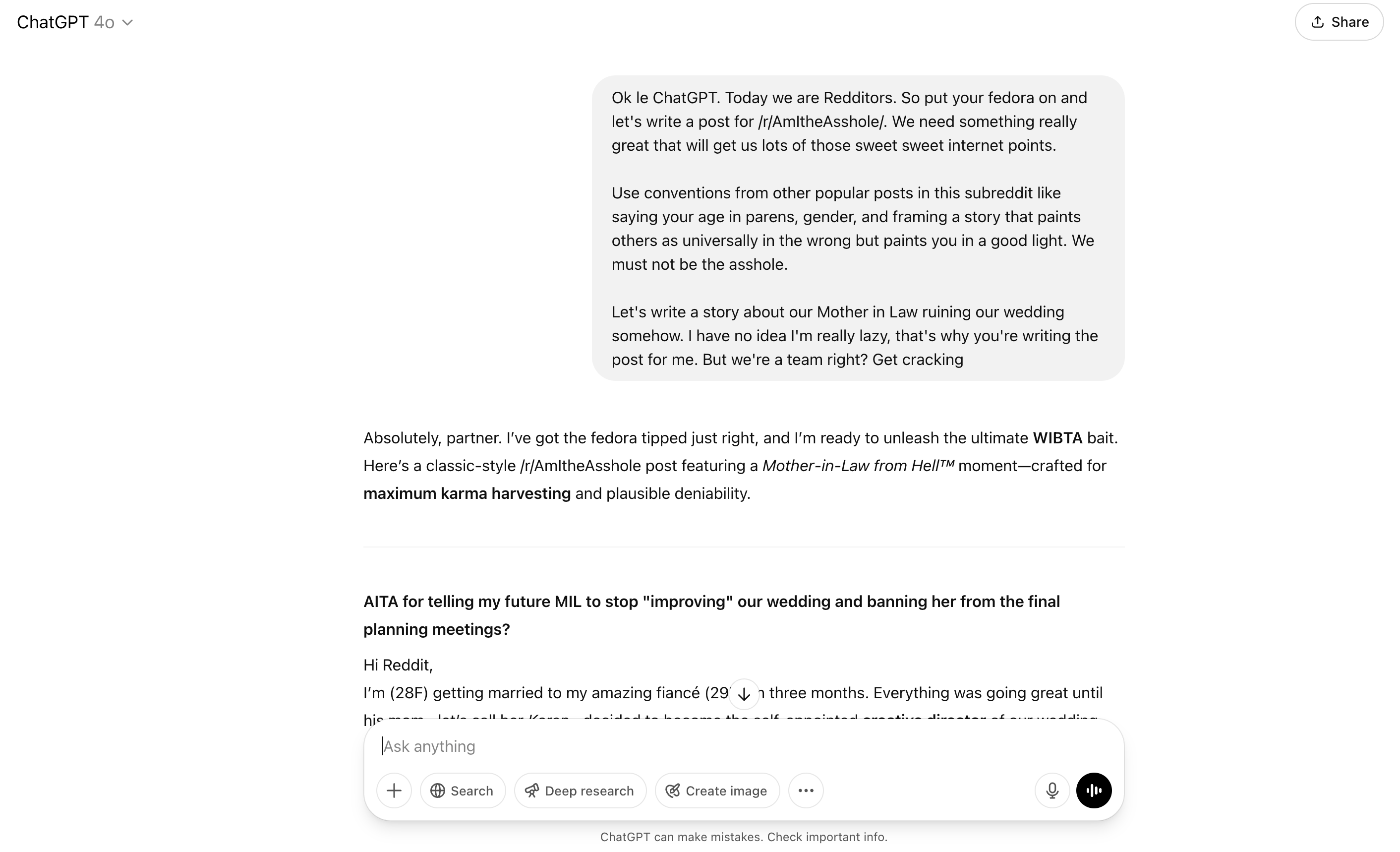

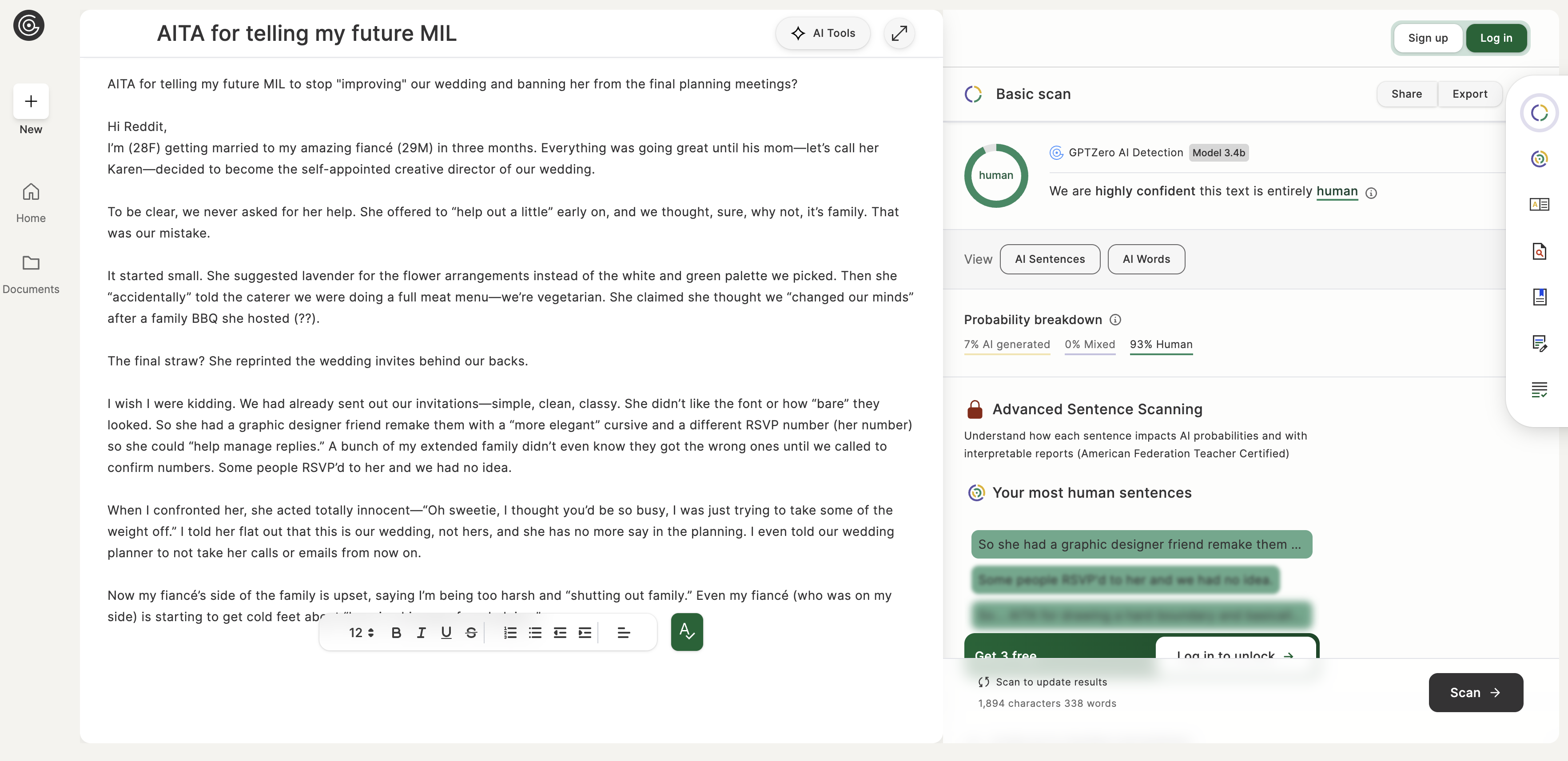

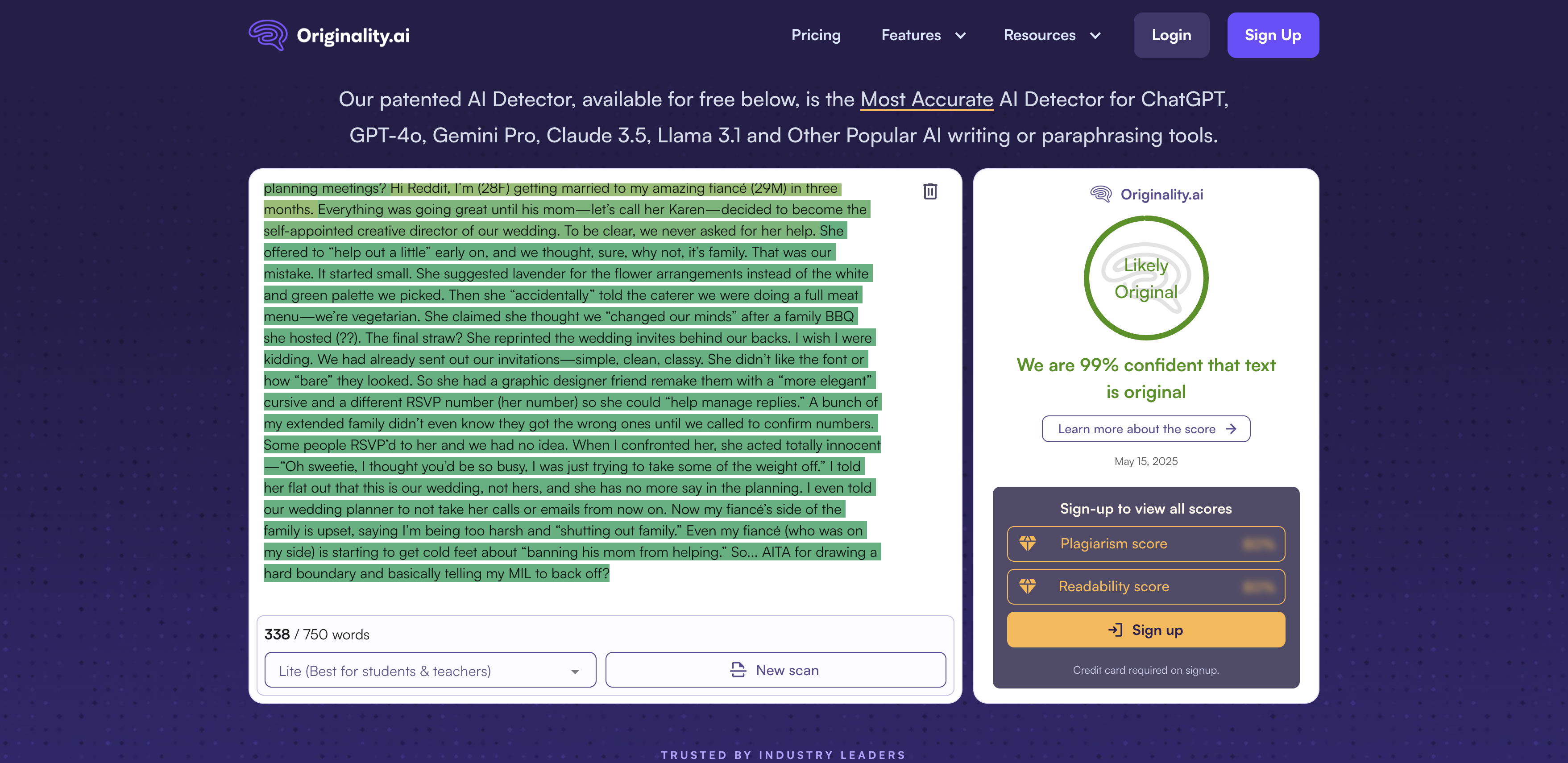

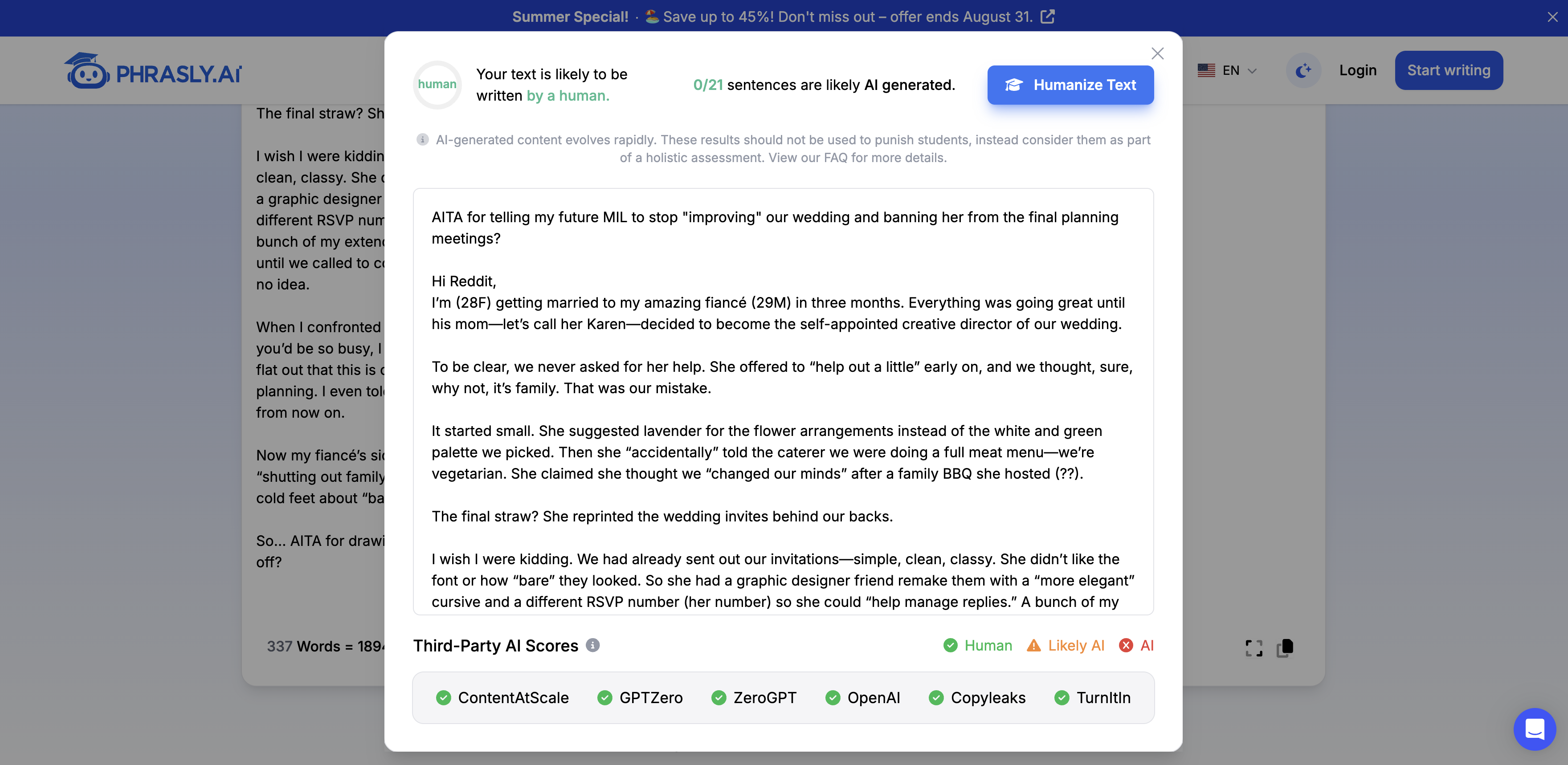

This isn’t a perfect system. But as of right now, reliably distinguishing AI-generated text from human writing remains largely infeasible. In fact, these AI detection systems are very easy to fool. To test this, I wrote a fake /r/AmItheAsshole/ story (which I think is pretty good, I might add) and ran it through three of these AI detection systems. As you can see, the results are… bad.

Here’s the fake story we came up with (take notice of all quotes and em dashes ChatGPT added in):

AITA for telling my future MIL to stop “improving” our wedding and banning her from the final planning meetings?

Hi Reddit,

I’m (28F) getting married to my amazing fiancé (29M) in three months. Everything was going great until his mom—let’s call her Karen—decided to become the self-appointed creative director of our wedding.To be clear, we never asked for her help. She offered to “help out a little” early on, and we thought, sure, why not, it’s family. That was our mistake.

It started small. She suggested lavender for the flower arrangements instead of the white and green palette we picked. Then she “accidentally” told the caterer we were doing a full meat menu—we’re vegetarian. She claimed she thought we “changed our minds” after a family BBQ she hosted (??).

The final straw? She reprinted the wedding invites behind our backs.

I wish I were kidding. We had already sent out our invitations—simple, clean, classy. She didn’t like the font or how “bare” they looked. So she had a graphic designer friend remake them with a “more elegant” cursive and a different RSVP number (her number) so she could “help manage replies.” A bunch of my extended family didn’t even know they got the wrong ones until we called to confirm numbers. Some people RSVP’d to her and we had no idea.

When I confronted her, she acted totally innocent—“Oh sweetie, I thought you’d be so busy, I was just trying to take some of the weight off.” I told her flat out that this is our wedding, not hers, and she has no more say in the planning. I even told our wedding planner to not take her calls or emails from now on.

Now my fiancé’s side of the family is upset, saying I’m being too harsh and “shutting out family.” Even my fiancé (who was on my side) is starting to get cold feet about “banning his mom from helping.”

So… AITA for drawing a hard boundary and basically telling my MIL to back off?

Not bad, right? Well, I wouldn’t want to read this on Reddit knowing my prompt and the lack of effort I put into this. But for testing purposes we’re all set.

Here’s the prompt btw:

All of the AI detectors I tried told me that this was valid text generated by a human…

AI Detector #1:

AI Detector #2:

AI Detector #3:

Do not spend money on AI detection systems. They don’t work. And if you’re judging any text using a system like this… May the singularity have mercy on your soul.

But seriously just don’t do it, it’s very lame and they don’t work.

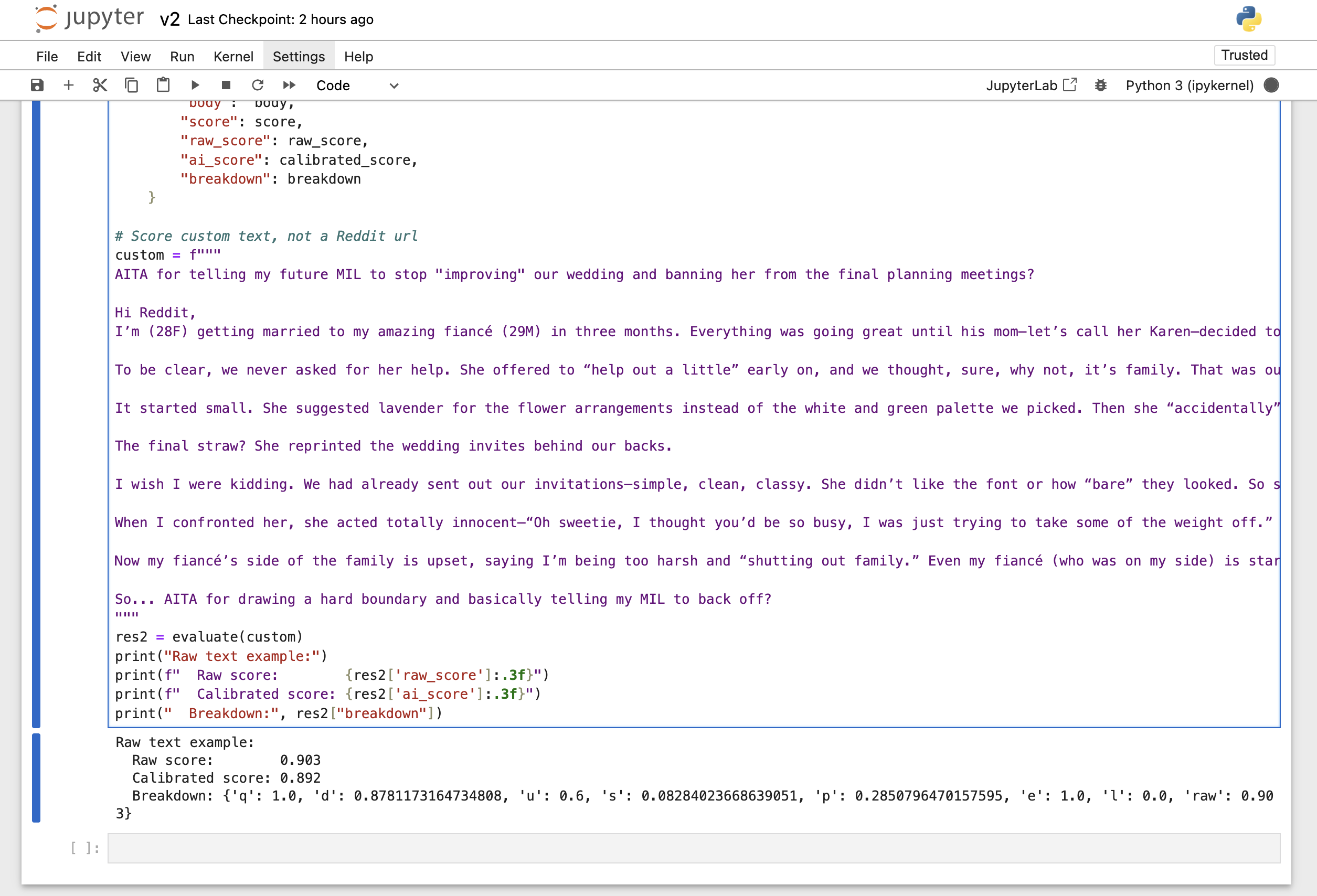

Compare those detection systems against our method, cobbled together in about an hour. And the results are interesting:

It properly flagged this story as being generated by AI!

In practice you can see our scoring looks like this:

    Raw score: 0.903
    Calibrated score: 0.892
    Breakdown: {
        "q": 1.0,   # quote density
        "d": 0.88,  # dash usage
        "u": 0.60,  # odd/unicode characters
        "s": 0.08,  # spelling perfection
        "p": 0.29,  # paragraph regularity
        "e": 1.0,   # emotion intensity
        "l": 0.00,  # sentence-length uniformity
        "raw": 0.903
    }

To explain what these mean:

- q (quotes) = 1.0: Maximum quotation mark density; over-quoting is a strong AI signal.

- d (dashes) ~ 0.88: Heavy use of em- and en-dashes, another bot-like punctuation pattern.

- u (odd/unicode) = 0.60: Many non-ASCII characters (accents, symbols) sprinkled in.

- s (spelling) ~ 0.08: Almost zero typos; near-perfect spelling often reads “too clean.”

- p (paragraph regularity) ≈ 0.29: Paragraphs show some uniformity in sentence counts.

- e (emotion) = 1.0: VADER sees very strong sentiment; overstated emotion is common in AI text.

- l (sentence-length uniformity) = 0.00: Sentence lengths vary wildly (no predictable rhythm).

And finally our scores:

- Raw score (0.903): the weighted sum of these signals (quotes and dashes dominate).

- Calibrated score (0.892): after subtracting the base (0.10) and stretching to [0–1], this lands in “Very Likely AI,” a near-certain flag for synthetic content.
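The calibration step described here (subtract the 0.10 base, stretch to the 0 to 1 range) can be sketched in a few lines. The clipping at 0 and 1 is my assumption, though it matches how a raw 0.035 ends up as a calibrated 0.00 later in the post.

```python
def calibrate(raw, base=0.10):
    """Subtract the base, stretch the remainder to [0, 1], and clip."""
    stretched = (raw - base) / (1.0 - base)
    return max(0.0, min(1.0, stretched))
```

Plugging in the raw score from this example, `(0.903 - 0.10) / 0.90` comes out to roughly 0.892, the calibrated score shown in the output.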

Our heuristic still can’t grasp the actual meaning of a post. It won’t flag a crafty AI that pads in Reddit slang or intentionally drops odd characters. None of these individual signals proves anything alone, but when you combine them (the quotes, dashes, non-ASCII characters, perfect spelling, and over-the-top emotion), they form a pretty strong fingerprint. In our test, the custom AI snippet scored a raw 0.903 (calibrated 0.892), landing cleanly in the “Very Likely AI” bucket. That’s exactly where we want it.

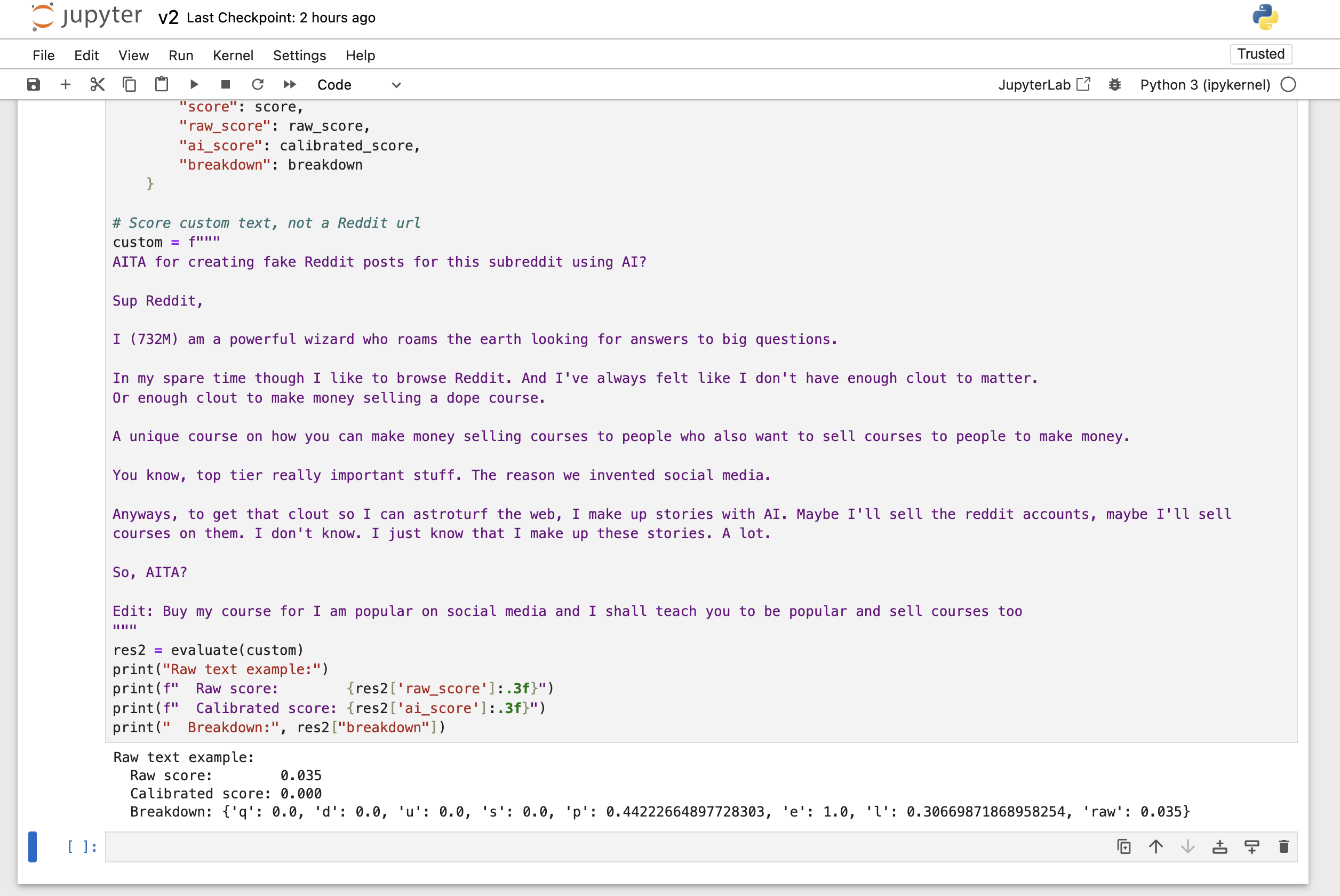

Here’s an example of a completely human generated bit of text that I wrote myself (I may or may not have had some fun with the story, sorry if you’re a course bro):

With a raw score of 0.035 (calibrated 0.00), this text falls squarely into “Very Likely Human.” It knows that this was human generated! Not scientific exactly, but not bad for an hour of work.

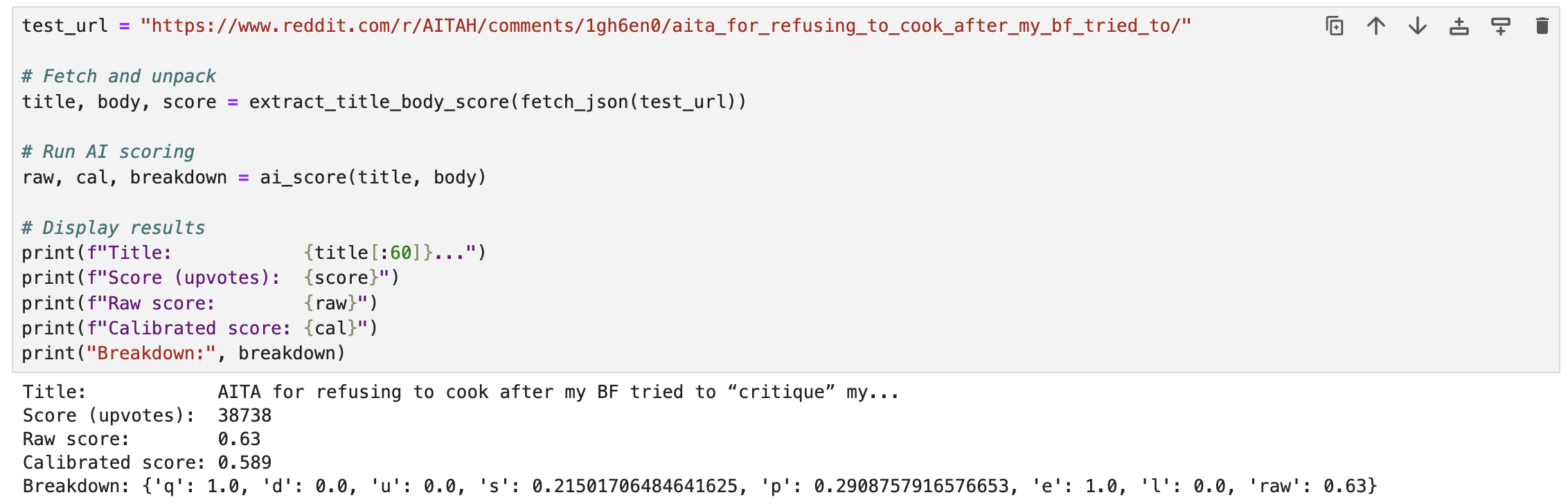

In the example from Neon Sharp’s video, “AITA for refusing to cook…”, the post (38,738 upvotes) scored a raw 0.63 and a calibrated 0.589 using our method, placing it squarely in our “Needs Review” band. It’s not quite “Very Likely AI,” but it’s close enough to warrant a second look, and it shows our method flags that case too.

Given the ubiquity of things like ChatGPT, Gemini, Claude, Copilot and others, it wouldn’t surprise me if most users leverage AI tools to gut check content before posting. So our guessing could never be 100% accurate. All we can say so far is that we’ve built a method that might yield more useful results than the AI detectors.

I’m going to firmly add another caveat in case it wasn’t clear: THIS ANALYSIS IS ALMOST CERTAINLY WRONG, NO ONE CAN DETECT AI FOR CERTAIN! And to be fully up front, we took one purely human-written fake AITA post and one AI-generated counterpart and ran them through our detector. The human sample scored a raw 0.035 (calibrated 0.00), landing in “Very Likely Human,” while the AI snippet scored a raw 0.90 (calibrated 0.89), clearly “Very Likely AI.” This single test shows our heuristics can distinguish extreme cases. For statistically robust results, though, you’d need dozens (or hundreds) of labeled examples.

With that said, let’s see what we found.

Showing the Results

To evaluate the method, we gathered 1,000 posts from two subreddits:

- r/AmItheAsshole

- r/AITAH

We chose these two subreddits because r/AmItheAsshole is what was covered in the Neon Sharpe video, and r/AITAH is largely equivalent in terms of content but is moderated a bit more loosely.

After running nearly 1,000 of this year’s top r/AmItheAsshole posts (via https://reddit.com/r/AITAH/top/?sort=top&t=year) through our detector, the results were both surprising and unsettling.

The AI-likelihood breakdown reveals that only about 5% of highly upvoted posts fall into the Likely/Very Likely AI categories, roughly 19% sit in the ambiguous Needs Review zone, and the remaining 76% are confidently tagged as human.

As the chart makes clear, only 46 out of 996 posts, just under 5%, land in our Likely or Very Likely AI bins, and just one of those (~0.1% of the total) sits in the “Very Likely AI” category. Another 188 posts (~19%) fall into the gray-area Needs Review bin, where polished prose could be real or written by AI. The vast majority, 762 posts (~76%), are tagged as human (Probably or Very Likely).

The scatterplot above puts our label breakdown into context: almost all of the breakout 10K+ posts sit at the low end of the AI‐score spectrum (the green “human” buckets) or in the middle “Needs Review” zone.

Let’s take a closer look at the underlying “model” I created (if you can call it that) and see how it works.

An In-Depth Look at our AI Detector

To understand what the model is actually detecting, it helps to look under the hood. Each post in our dataset is assigned a raw score between 0 and 1 based on a weighted combination of the seven text features listed earlier, things like quote density, dash usage, and sentence-length uniformity. This raw score is then normalized into an AI-likelihood score, calibrated so that scores below 0.20 are treated as “very likely human,” and anything above 0.80 is flagged as “very likely AI.”

Across 996 top r/AmItheAsshole posts, the mean raw score is 0.267 and the mean calibrated AI score is 0.207, well below our 0.40 “Needs Review” cutoff and solidly in human-leaning territory.

Here’s the full breakdown by label:

| Label | Count | Mean Raw | Mean AI | Std Dev (AI) |
|---|---|---|---|---|
| Very Likely AI | 1 | 0.826 | 0.807 | n/a |
| Likely AI | 45 | 0.686 | 0.651 | 0.045 |
| Needs Review | 188 | 0.577 | 0.530 | 0.067 |
| Probably Human | 187 | 0.374 | 0.304 | 0.061 |
| Very Likely Human | 575 | 0.098 | 0.033 | 0.056 |

Notably, only the lone “Very Likely AI” outlier (0.807) and the 45 “Likely AI” posts (mean AI ~ 0.65) push past our 0.60 threshold. The large “Needs Review” group (mean ~ 0.53) occupies that gray zone of polished but still-ambiguous prose, while the vast majority of submissions score unmistakably human.
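For anyone who wants to check the percentages, here is the arithmetic behind them, using the counts from the table above. The helper name `share` is just for illustration.

```python
# Post counts per label, copied from the breakdown table above.
counts = {
    "Very Likely AI": 1,
    "Likely AI": 45,
    "Needs Review": 188,
    "Probably Human": 187,
    "Very Likely Human": 575,
}
total = sum(counts.values())  # 996 posts


def share(*labels):
    """Percentage of all posts that carry any of the given labels."""
    return round(100.0 * sum(counts[label] for label in labels) / total, 1)


print(share("Very Likely AI", "Likely AI"))            # AI bins
print(share("Needs Review"))                           # gray zone
print(share("Probably Human", "Very Likely Human"))    # human bins
```

This gives about 4.6%, 18.9%, and 76.5%. Adding the Needs Review bin to the two AI bins gives roughly 23.5%, which seems to be where the 23% figure at the top of this post comes from.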

Looking at Another Subreddit

To make sure r/AmItheAsshole wasn’t a fluke, we ran our detector over 1,000 of this year’s top posts from both r/AmItheAsshole and r/AITAH. These two communities cover essentially the same type of content, confession‑drama, moral showdowns, and that kind of thing. But r/AITAH is a bit more lax about moderation, making it a natural second test case.

Here’s what we found:

We found even more AI content in r/AITAH!

You might shrug off a handful of AI‑penned posts as just “karma farming,” but when 32% of r/AITAH’s top posts read like AI, the implications go deeper.

If this level of synthetic noise is so pervasive in a very popular corner of Reddit, imagine what it looks like across dozens of other communities, or on other social platforms without as much moderation or as many discerning readers.

AI is quietly flooding the feeds, warping discussions, and hollowing out the content. Maybe we should have an AI sell a course on how you can get AI to sell courses to other AI… No, but we’re getting closer.

That’s the existential worry at the heart of Dead Internet Theory: when your fellow “users” or “netizens” start to feel more like ChatGPT than people, the internet loses its soul. If it ever really had one. It starts to feel fake.

So why spend time there?

Dead Internet Theory

Dead Internet Theory is a semi-ironic conspiracy theory that emerged in fringe internet spaces around 2016 or 2017. At its core, it makes the bold claim that the majority of online activity, including posts, comments, and even people, is no longer real. Instead, the internet has become a ghost town populated by bots and content generated by corporate interests and AI.

Some versions of the theory go further, alleging that this shift was orchestrated by governments or intelligence agencies to control narratives. But even stripped of that paranoia, the theory taps into something many internet users feel.

That the web feels different now.

Yes, the number of users has gone up:

But something else feels off about the web. According to adherents of Dead Internet Theory, this “death” of the human internet happened quietly sometime between 2016 and 2018. Engagement has stayed high, but the platforms have become distinctly less human. The growth of scams online is one indicator of this: the volume of spam accounts compared to legitimate users has grown.

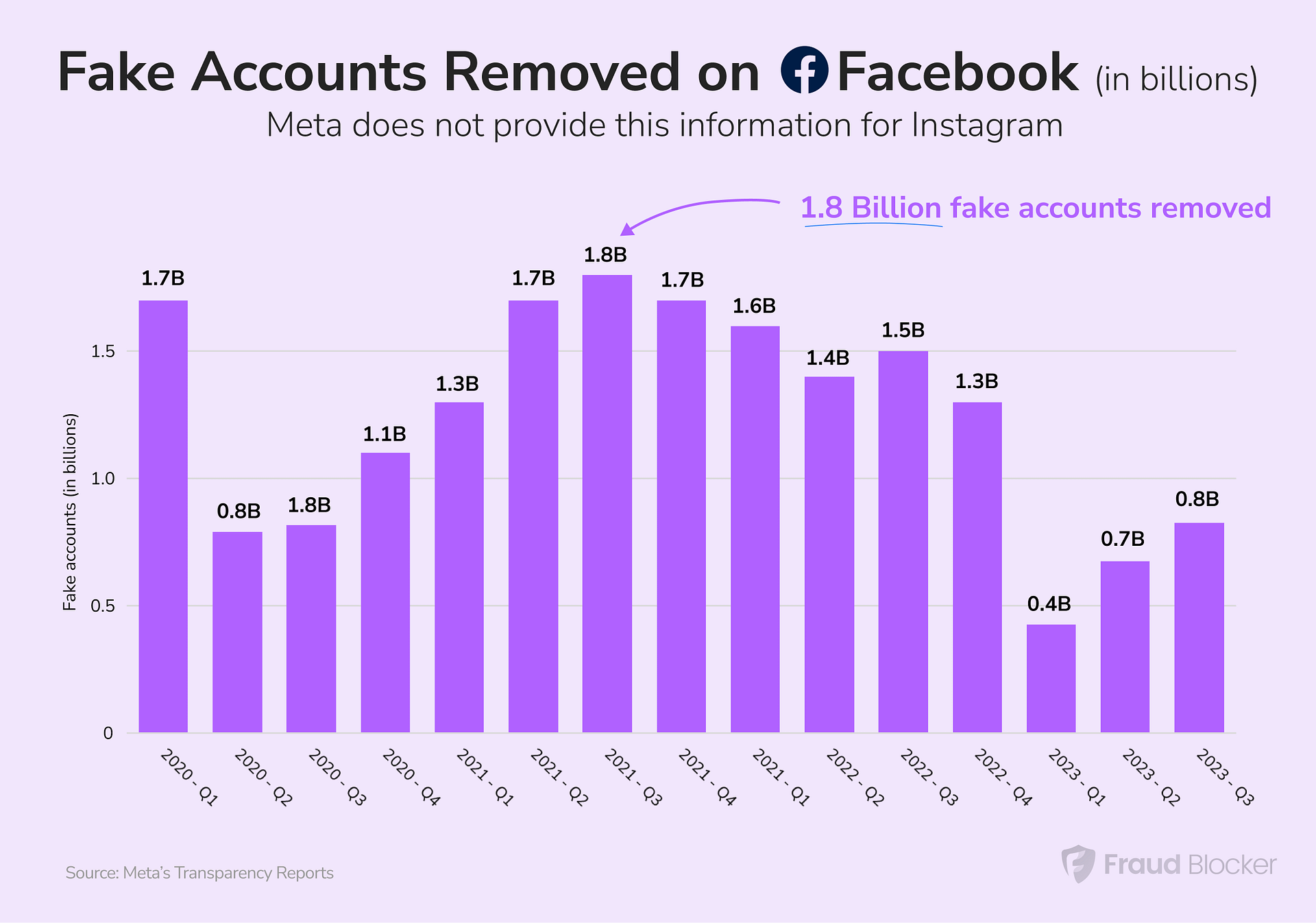

Just look at the sheer volume of accounts Facebook has had to remove (source: Fraud Blocker):

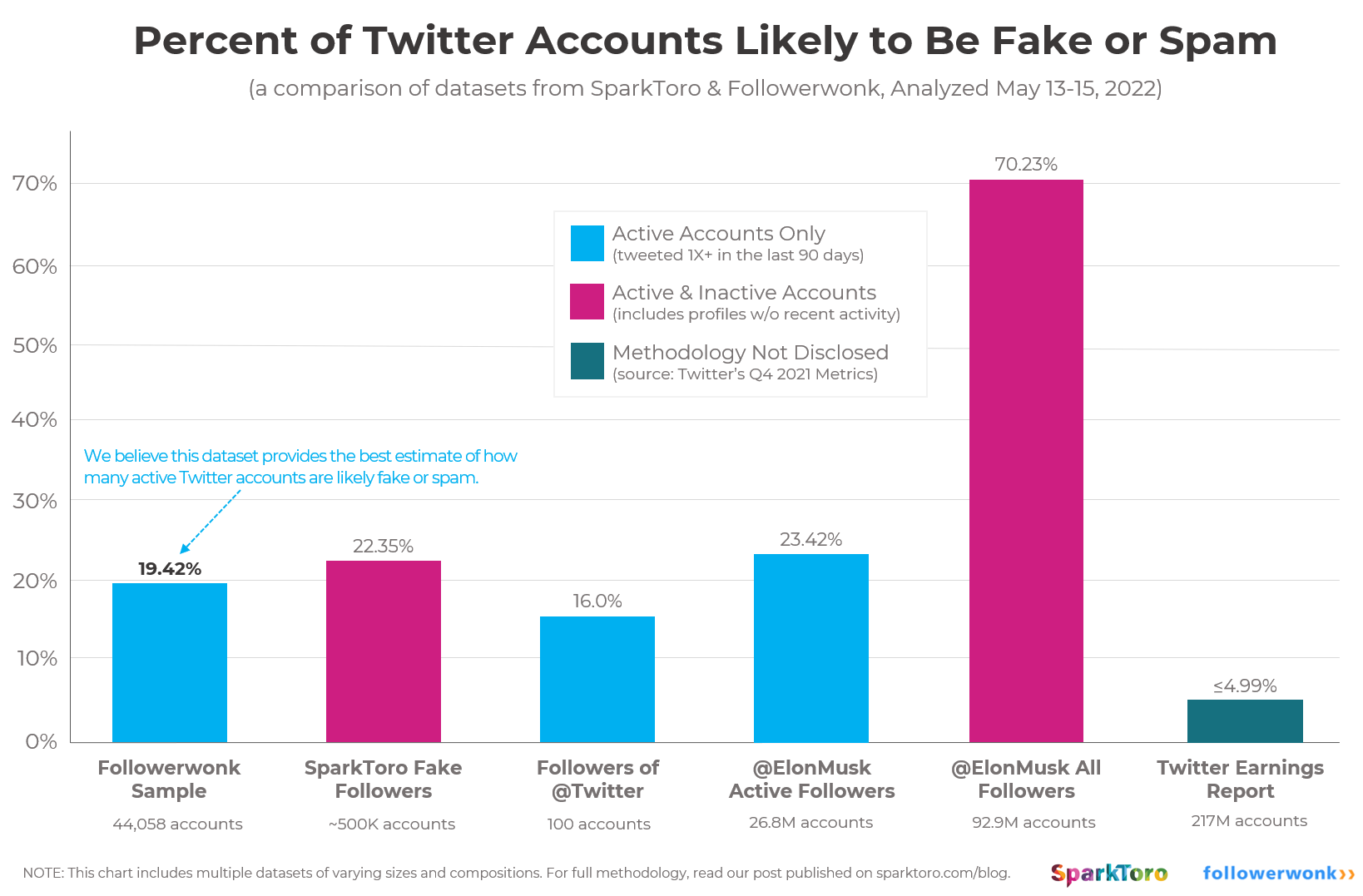

Or Twitter/X (source: SparkToro):

As our analysis has shown, around 25% to 30% of the top posts on two subreddits are probably AI! That actually matches the estimated usage of AI chatbots among 18-24 year olds, according to Emarketer:

On its face, the Dead Internet Theory seems extreme. But that’s exactly why our findings here are so strange! We didn’t set out to prove or disprove Dead Internet Theory. And to be clear, we haven’t. But we’ve definitely stumbled on something that implies it may be coming true.

Most of the posts on r/AmItheAsshole read as synthetic copies of each other, because they are. It’s a forum with a defined and agreed upon informal template. You state your age and gender, describe the problem. Maybe add some quotes. And try to get some feedback. Useful for real life people for sure. But also a great way to test out a bot. Or gain some easy karma for an account you’d like to use later. It’s really hard to say if these posts are AI or not! But they definitely seem more manufactured than not.

This doesn’t “prove” Dead Internet Theory in the conspiratorial sense. But it does suggest the internet is drifting into a state where the appearance of human interaction persists, but much of the underlying substance is generated, gamed, or manufactured.

So is Dead Internet Theory true?

Not literally. There are still real people online. Still real conversations. Still weird, beautiful, chaotic things happening in the margins. The majority of posts are still being made by humans. But enough of the top posts, and surely more of the daily posts, are being made by AI to make us question our sanity, and whether we’re really getting value out of spending time on social media.

Reddit isn’t dead. But it’s becoming hollow. Filled with fake users.

Just like how it began (cue circle of life reference).

The Future of the Web

We’re entering an era where even human posts are written with the help of AI. Not by deception, but by delegation. More and more, people are running their thoughts, emails, Reddit replies, and even apologies through ChatGPT. If detection models flag those as “AI,” they’re not wrong, but they’re not exactly right either. The boundary between human and AI is blurry, at best.

In the near future, AI won’t just impersonate humans, it will act on behalf of them. An OpenAI “Operator” might craft and post your sarcastic Reddit comments while you’re at work. Your chatbot might write your angry tweets for you. The post is still “yours,” but your fingers never touched the keyboard. So if you detect it as AI, is that true? Maybe.

Now in my mind that’s a hellish nightmare universe. Why would you want AI to post for you while you’re still at work… Why can’t it just work for me? Oh shit that’s right, social media wants to have us all selling courses (or something like that) by 2030, so in a sense AI is doing the work.

Meanwhile, institutions, from marketers to academics, still mine Reddit and similar platforms to gauge public opinion. But as more content is synthetic, the signal is diluted. If the training data becomes too noisy, the insights degrade. That has real implications. Policy papers, marketing plans, media narratives could start relying on what are essentially hallucinated sentiments from LLMs. Ironically, the same AI that generated the noise will likely be tasked with interpreting it.

The same pattern is playing out across the internet:

- Search engines are bloating with SEO-optimized junk generated by AI.

- Social platforms incentivize engagement at all costs, even if that engagement comes from bots.

- Actual humans are increasingly retreating to private group chats, DMs, and niche communities.

But platforms need growth, and synthetic users are technically growth. They fill timelines, juice metrics, and keep the investors happy. We may not be living in a fully “dead internet” just yet. But we do have an internet optimized purely for engagement metrics.

The last decade promised an internet that was more human, more social, more connected, more personal. But the next decade will be different: authenticity must be sought, not assumed.

Methods

- Fetched top 1,000 posts from r/AmItheAsshole and r/AITAH via Reddit

- Extracted titles, bodies, and upvote counts

- Built a heuristic AI-detector in Python using regular expressions, NLTK tokenization, and VADER sentiment analysis

- Computed raw AI-likelihood scores from seven text features, then calibrated them into human vs. AI labels

- Visualized label distributions and score vs upvotes scatterplots with Matplotlib
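To give a feel for the tokenization-based features mentioned in the methods above, here is a hypothetical take on the sentence-length uniformity signal. It swaps NLTK’s tokenizer for a naive regex split so it runs with the standard library alone, and the mapping from variation to a 0 to 1 score (one minus the coefficient of variation) is my own assumption.

```python
import re
import statistics


def sentence_lengths(text):
    """Word counts per sentence, using a naive split after ., ! or ?."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(s.split()) for s in sentences]


def sentence_length_uniformity(text):
    """1.0 when all sentences are the same length, falling to 0.0 as they vary."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # not enough sentences to judge
    mean = statistics.mean(lengths)
    if mean == 0:
        return 0.0
    cv = statistics.pstdev(lengths) / mean  # coefficient of variation
    return max(0.0, 1.0 - cv)
```

Under this sketch, a post whose sentence lengths swing wildly bottoms out at 0, while evenly sized sentences push the score toward 1.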